Benchmarking Large Language Models

A Comprehensive Overview

Large Language Models (LLMs) are sophisticated AI systems capable of generating human-quality text, translating languages, writing different kinds of creative content, and answering your questions in an informative way. To evaluate their performance and identify areas for improvement, researchers and developers rely on benchmarks.

What is an LLM Benchmark?

An LLM benchmark is essentially a standardized yardstick used to measure a model’s capabilities. It consists of a carefully curated dataset of tasks or questions, along with predefined evaluation metrics. By subjecting an LLM to these benchmarks, we can quantitatively assess its performance on various dimensions.

Key Components of an LLM Benchmark

Dataset: A collection of diverse data points, ranging from simple factual questions to complex reasoning problems.

Tasks: A set of challenges designed to test specific LLM abilities, such as question answering, summarization, translation, or code generation.

Metrics: Quantitative measures to evaluate model outputs, including accuracy, precision, recall, F1 score, perplexity, BLEU, and ROUGE.

Scoring Mechanism: A system to assign a numerical score based on the model’s performance on the given tasks and metrics.
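To make the metric and scoring components concrete, here is a minimal sketch of one common combination: a SQuAD-style token-overlap F1 per item, averaged within each task, then averaged across tasks. It assumes only lowercasing and whitespace tokenization (real benchmarks usually also strip punctuation and articles). The function names and the tiny `results` dataset are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a model answer and one reference answer."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Two empty answers match; one empty side scores zero.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection: a shared token counts at most min(count) times.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def benchmark_score(
    results: Dict[str, List[Tuple[str, str]]]
) -> Tuple[Dict[str, float], float]:
    """Map task -> [(prediction, reference), ...] to per-task and overall scores.

    Each task score is the mean F1 as a percentage; the overall score is the
    unweighted (macro) average, so every task counts equally regardless of size.
    """
    per_task: Dict[str, float] = {}
    for task, pairs in results.items():
        if not pairs:
            raise ValueError(f"task {task!r} has no examples")
        per_task[task] = 100.0 * sum(token_f1(p, r) for p, r in pairs) / len(pairs)
    overall = sum(per_task.values()) / len(per_task)
    return per_task, overall


results = {
    "qa": [("Eiffel Tower", "the Eiffel Tower"), ("Berlin", "Paris")],
    "summarization": [("cats sleep a lot", "cats sleep a lot")],
}
per_task, overall = benchmark_score(results)
print(per_task, overall)  # qa ≈ 40, summarization = 100, overall ≈ 70
```

A macro average keeps a small task from being drowned out by a large one. Weighting each task by its number of items would instead give a micro average.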

Types of Benchmarking Approaches

Zero-shot: The model is presented with a task without any prior examples, testing its ability to generalize knowledge.

Few-shot: The model receives a small number of examples before tackling the task, testing how well it learns from limited data.

Fine-tuned: The model is trained on a dataset similar to the benchmark to optimize its performance on specific tasks.
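For the two prompting approaches, the only difference lies in what the model sees before the test question, which a short sketch can show. The `Q:`/`A:` template and the `build_prompt` helper are illustrative assumptions, not a format any particular benchmark prescribes. Fine-tuning changes the model's weights rather than the prompt, so it is not shown.

```python
from typing import Sequence, Tuple


def build_prompt(question: str,
                 examples: Sequence[Tuple[str, str]] = ()) -> str:
    """Zero-shot when `examples` is empty, k-shot when it holds k solved pairs."""
    parts = [f"Q: {q}\nA: {a}" for q, a in examples]  # solved demonstrations
    parts.append(f"Q: {question}\nA:")                 # the item being evaluated
    return "\n\n".join(parts)


demos = [("What is 2 + 3?", "5"), ("What is 7 - 4?", "3")]
print(build_prompt("What is 6 + 1?"))          # zero-shot
print(build_prompt("What is 6 + 1?", demos))   # 2-shot
```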

The Role of Benchmarks in LLM Development

Model Evaluation: Benchmarks help identify strengths and weaknesses of LLMs, guiding improvement efforts.

Progress Tracking: Comparing benchmark scores over time shows how models develop and evolve.

Fair Comparison: Benchmarks provide a standardized framework for comparing different LLMs.

Research Catalyst: Benchmarks stimulate research by defining challenging problems and evaluation criteria.

Benchmark Comparison

General Capabilities

The MMLU benchmark measures a model’s multitask accuracy. It covers 57 tasks, including elementary mathematics, US history, computer science, and law, at depths ranging from elementary to advanced professional level.
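Each MMLU question offers four answer choices (A to D), and the headline score is accuracy. A minimal sketch of formatting one item and scoring letter predictions follows. The prompt layout is an assumption (evaluation harnesses differ in wording and in how they read off the model's choice), and the sample question is made up.

```python
from typing import List

LETTERS = "ABCD"


def format_mc_question(question: str, choices: List[str]) -> str:
    """Render a four-option item: question, lettered choices, then 'Answer:'."""
    if len(choices) != len(LETTERS):
        raise ValueError("expected exactly four choices")
    lines = [question]
    lines += [f"{letter}. {choice}" for letter, choice in zip(LETTERS, choices)]
    lines.append("Answer:")
    return "\n".join(lines)


def accuracy(predicted: List[str], gold: List[str]) -> float:
    """Percentage of items whose predicted letter equals the gold letter."""
    if not gold or len(predicted) != len(gold):
        raise ValueError("need non-empty lists of equal length")
    correct = sum(p == g for p, g in zip(predicted, gold))
    return 100.0 * correct / len(gold)


print(format_mc_question("What is 12 * 12?", ["124", "144", "142", "132"]))
print(accuracy(["B", "C", "A", "D"], ["B", "C", "B", "D"]))  # 75.0
```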

Top 5 Models in General Capabilities (MMLU)

| Model | Provider | MMLU Score (%) |
|---|---|---|
| GPT-4o | OpenAI | 88.7 |
| Llama 3.1 405b | Meta | 88.6 |
| Claude 3.5 Sonnet | Anthropic | 88.3 |
| GPT-4 Turbo | OpenAI | 86.5 |
| GPT-4 | OpenAI | 86.4 |

Coding

HumanEval is one of the most widely used benchmarks for evaluating code generation. It has 164 handwritten programming problems that test language comprehension, algorithms, and simple mathematics, comparable to simple software interview questions.
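HumanEval results are usually reported as pass@k: the probability that at least one of k generated solutions passes a problem's unit tests. The paper that introduced HumanEval (Chen et al., 2021) estimates it without bias by generating n ≥ k samples per problem, counting the c that pass, and computing 1 − C(n − c, k) / C(n, k). The sketch below implements that formula with exact binomial coefficients; the sample counts are invented for illustration.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them pass the tests.

    Equals 1 - C(n - c, k) / C(n, k), the chance that a random subset of k
    of the n samples contains at least one passing sample.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every k-subset must include a passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(per_problem: List[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, as a percentage."""
    return 100.0 * sum(pass_at_k(n, c, k) for n, c in per_problem) / len(per_problem)


# Hypothetical (samples generated, samples passing) counts for three problems.
counts = [(10, 3), (10, 0), (10, 10)]
print(benchmark_pass_at_k(counts, k=1))  # ≈ 43.3
print(benchmark_pass_at_k(counts, k=5))
```

With k = 1 the estimator reduces to c / n, the fraction of passing samples, which is why pass@1 is often read as a simple success rate.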

Top 5 Models in Coding (HumanEval)

| Model | Provider | HumanEval Score (%) |
|---|---|---|
| Claude 3.5 Sonnet | Anthropic | 92 |
| GPT-4o | OpenAI | 90.2 |
| Llama 3.1 405b | Meta | 89 |
| GPT-4o mini | OpenAI | 87.2 |
| GPT-4 Turbo | OpenAI | 87.1 |

Reasoning

🧠 GPQA is a benchmark designed to evaluate the reasoning capabilities of LLMs. It has 448 multiple-choice questions in biology, physics, and chemistry, written by domain experts to ensure high quality and difficulty.
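GPQA questions have four options, so scoring a free-form response first requires pulling out the chosen letter. This sketch assumes the prompt asked the model to state its choice with a phrase like "Answer: C"; the regular expression and `extract_choice` are illustrative, and a response they cannot parse would simply be scored as wrong.

```python
import re
from typing import Optional

# Matches phrases such as "Answer: C", "answer is (B)" or "Final answer - D".
# The \b after the letter stops words like "definitely" from matching as "D".
_ANSWER_RE = re.compile(r"answer\s*(?:is)?\s*[:\-]?\s*\(?([ABCD])\b\)?", re.IGNORECASE)


def extract_choice(response: str) -> Optional[str]:
    """Return the last stated choice letter (A-D), or None if none is found."""
    matches = _ANSWER_RE.findall(response)
    return matches[-1].upper() if matches else None


print(extract_choice("First I thought the answer is A, but the answer is (C)."))  # C
```

Taking the last match is a deliberate choice: models often reconsider mid-response, and the final statement is usually the one intended.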

Top 5 Models in Reasoning (GPQA)

| Model | Provider | GPQA Score (%) |
|---|---|---|
| Claude 3.5 Sonnet | Anthropic | 59.4 |
| GPT-4o | OpenAI | 53.6 |
| Llama 3.1 405b | Meta | 51.1 |
| Claude 3 Opus | Anthropic | 50.4 |
| GPT-4 Turbo | OpenAI | 48 |

Math

🧮 The MATH benchmark evaluates LLMs on math tasks. It contains 12,500 challenging math problems, each with a step-by-step solution that can be used to teach models to generate answers and explanations.
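Reference solutions in MATH mark the final answer with LaTeX's `\boxed{...}`, so one common way to grade a model is to ask it to do the same and compare the boxed answers. This sketch uses exact string comparison after removing spaces, which is stricter than graders that normalize equivalent forms such as `0.5` and `\frac{1}{2}`; the helper names are hypothetical.

```python
from typing import Optional


def last_boxed(solution: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in a solution, or None.

    Braces are matched by counting depth, so nested groups like \\frac{1}{2} survive.
    """
    start = solution.rfind("\\boxed{")
    if start == -1:
        return None
    i = start + len("\\boxed{")
    depth = 1
    for j in range(i, len(solution)):
        if solution[j] == "{":
            depth += 1
        elif solution[j] == "}":
            depth -= 1
            if depth == 0:
                return solution[i:j]
    return None  # unbalanced braces


def is_correct(model_output: str, reference_solution: str) -> bool:
    """Exact match on the boxed answers after removing spaces."""
    pred, gold = last_boxed(model_output), last_boxed(reference_solution)
    if pred is None or gold is None:
        return False
    return pred.replace(" ", "") == gold.replace(" ", "")


ref = r"... so the probability is $\boxed{\frac{1}{2}}$."
print(last_boxed(ref))  # \frac{1}{2}
```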

Top 5 Models for Math (MATH)

| Model | Provider | MATH Score (%) |
|---|---|---|
| GPT-4o | OpenAI | 76.6 |
| Llama 3.1 405b | Meta | 73.8 |
| GPT-4 Turbo | OpenAI | 72.6 |
| Claude 3.5 Sonnet | Anthropic | 71.1 |
| GPT-4o mini | OpenAI | 70.2 |

Tool Use

🛠 The Berkeley Function Calling Leaderboard (BFCL) is the first comprehensive evaluation of an LLM’s ability to call functions and tools. It includes 2,000 question-function-answer pairs across multiple programming languages, covering diverse domains and complex use cases with multiple or parallel function calls.
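One way to check a generated tool call without executing it is to parse it into a syntax tree and compare the function name and arguments with the expected call; BFCL reports an AST-based evaluation of this kind alongside other checks. The sketch below is a simplified stand-in written for illustration, not BFCL's actual checker: it handles a single call with literal keyword arguments only, and `get_weather` is a hypothetical function.

```python
import ast
from typing import Any, Dict, Tuple


def parse_call(call: str) -> Tuple[str, Dict[str, Any]]:
    """Parse one call like 'get_weather(city="Paris")' into (name, kwargs)."""
    node = ast.parse(call, mode="eval").body
    if not isinstance(node, ast.Call) or not isinstance(node.func, ast.Name):
        raise ValueError(f"not a simple function call: {call!r}")
    if node.args:
        raise ValueError("positional arguments are not supported in this sketch")
    # literal_eval raises ValueError for non-literal values such as bare names.
    kwargs = {kw.arg: ast.literal_eval(kw.value) for kw in node.keywords}
    return node.func.id, kwargs


def call_matches(predicted: str, expected: str) -> bool:
    """True when function name and keyword arguments agree; argument order is ignored."""
    try:
        return parse_call(predicted) == parse_call(expected)
    except (SyntaxError, ValueError):
        return False  # unparseable output counts as a miss


print(call_matches('get_weather(unit="celsius", city="Paris")',
                   "get_weather(city='Paris', unit='celsius')"))  # True
```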

Top 5 Models for Tool Use (BFCL)

| Model | Provider | BFCL Score (%) |
|---|---|---|
| Claude 3.5 Sonnet | Anthropic | 90.2 |
| Llama 3.1 405b | Meta | 88.5 |
| Claude 3 Opus | Anthropic | 88.4 |
| GPT-4 | OpenAI | 88.3 |
| GPT-4 Turbo | OpenAI | 86 |

Multilingual Capabilities

🗣 MGSM evaluates the reasoning abilities of LLMs in multilingual settings. It takes 250 problems from GSM8K (another math benchmark) and has human annotators translate each of them into 10 languages.
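Because GSM8K-style answers are integers, a simple grading sketch takes the last number in each response and compares it with the gold answer, then reports accuracy per language. Averaging the per-language figures is one simple way to summarize them, assumed here rather than taken from the benchmark's definition. The three sample responses are invented, and the number parser treats commas as thousands separators, which would misread decimal commas.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

_NUMBER_RE = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def last_number(response: str) -> Optional[float]:
    """Return the last number in a response, ignoring thousands separators."""
    matches = _NUMBER_RE.findall(response)
    if not matches:
        return None
    return float(matches[-1].replace(",", ""))


def per_language_accuracy(results: Iterable[Tuple[str, str, float]]) -> Dict[str, float]:
    """(language, model_response, gold_answer) triples -> accuracy % per language."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for lang, response, gold in results:
        total[lang] += 1
        pred = last_number(response)
        if pred is not None and abs(pred - gold) < 1e-6:
            correct[lang] += 1
    return {lang: 100.0 * correct[lang] / total[lang] for lang in total}


results = [
    ("de", "Die Antwort ist 18.", 18),
    ("de", "Also 20 Äpfel.", 18),
    ("sw", "Jibu ni 18", 18),
]
acc = per_language_accuracy(results)
print(acc)                           # {'de': 50.0, 'sw': 100.0}
print(sum(acc.values()) / len(acc))  # 75.0, unweighted mean over languages
```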

Top 5 Models for Multilingual Capabilities (MGSM)

| Model | Provider | MGSM Score (%) |
|---|---|---|
| Claude 3.5 Sonnet | Anthropic | 91.6 |
| Llama 3.1 405b | Meta | 91.6 |
| Claude 3 Opus | Anthropic | 90.7 |
| GPT-4o | OpenAI | 90.5 |
| GPT-4 Turbo | OpenAI | 88.5 |

Speed Comparison

🚀 In this section we compare open-source and proprietary models on latency (seconds until the first chunk of tokens is received) and throughput (tokens per second).
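Both speed metrics can be measured on the client from any streaming response. In this sketch `fake_stream` is a hypothetical placeholder for a real provider's streaming call, and tokens are approximated by whitespace-separated words. Leaderboards may define throughput slightly differently, for example by including or excluding the first chunk; here it counts only tokens received after the first chunk.

```python
import time
from typing import Iterable, Iterator, Optional, Tuple


def measure_stream(chunks: Iterable[str]) -> Tuple[float, float]:
    """Return (latency_s, throughput_tok_s) for one streamed response.

    Latency is the time until the first chunk arrives. Throughput counts the
    tokens received after that chunk, divided by the time spent receiving them.
    Tokens are approximated as whitespace-separated words (an assumption; real
    measurements would use the model's tokenizer or the API's usage counts).
    """
    start = time.perf_counter()
    first_at: Optional[float] = None
    tokens_after_first = 0
    for chunk in chunks:  # with a lazy generator, work starts at the first next()
        if first_at is None:
            first_at = time.perf_counter()
        else:
            tokens_after_first += len(chunk.split())
    end = time.perf_counter()
    if first_at is None:
        raise ValueError("the stream produced no chunks")
    elapsed = end - first_at
    throughput = tokens_after_first / elapsed if elapsed > 0 else 0.0
    return first_at - start, throughput


def fake_stream(delay: float = 0.05) -> Iterator[str]:
    """Hypothetical stand-in for a streaming API: waits, then yields words."""
    time.sleep(4 * delay)  # simulated queueing and prompt processing
    for word in "benchmarks measure speed as well as quality".split():
        time.sleep(delay)  # simulated per-chunk generation time
        yield word + " "


latency, throughput = measure_stream(fake_stream())
print(f"latency {latency:.2f} s, throughput {throughput:.1f} tokens/s")
```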

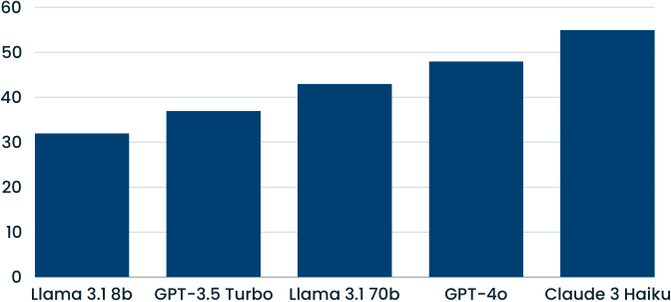

Latency (Lower is Better)

| Model | Provider | Latency (s) |
|---|---|---|
| Llama 3.1 8b | Meta via Groq | 0.32 |
| GPT-3.5 Turbo | OpenAI | 0.37 |
| Llama 3.1 70b | Meta via Groq | 0.43 |
| GPT-4o | OpenAI | 0.48 |
| Claude 3 Haiku | Anthropic | 0.55 |
| GPT-4o mini | OpenAI | 0.56 |
| Llama 3.1 405b | Meta via DeepInfra | 0.59 |
| GPT-4 Turbo | OpenAI | 0.60 |
| GPT-4 | OpenAI | 0.64 |
| Gemini 1.5 Flash | Google | 1.06 |
| Gemini 1.5 Pro | Google | 1.12 |
| Claude 3.5 Sonnet | Anthropic | 1.22 |
| Claude 3 Opus | Anthropic | 1.99 |

Throughput (Higher is Better)

| Model | Provider | Throughput (tokens/s) |
|---|---|---|
| Llama 3.1 8b | Meta via Groq | 723 |
| Llama 3.1 70b | Meta via Groq | 230 |
| Gemini 1.5 Flash | Google | 166 |
| Claude 3 Haiku | Anthropic | 133 |
| GPT-4o mini | OpenAI | 97 |
| GPT-3.5 Turbo | OpenAI | 84 |
| GPT-4o | OpenAI | 79 |
| Claude 3.5 Sonnet | Anthropic | 78 |
| Gemini 1.5 Pro | Google | 61 |
| GPT-4 Turbo | OpenAI | 28 |
| Llama 3.1 405b | Meta via DeepInfra | 27 |
| GPT-4 | OpenAI | 25 |
| Claude 3 Opus | Anthropic | 25 |

Context Window Comparison

Context Window Size per Model

| Model | Provider | Context Window (tokens) |
|---|---|---|
| Gemini 1.5 Pro | Google | 2,000,000 |
| Gemini 1.5 Flash | Google | 1,000,000 |
| Claude 3 Opus | Anthropic | 200,000 |
| Claude 3.5 Sonnet | Anthropic | 200,000 |
| Claude 3.5 Haiku | Anthropic | 200,000 |
| GPT-4o | OpenAI | 128,000 |
| GPT-4 Turbo | OpenAI | 128,000 |
| GPT-4o mini | OpenAI | 128,000 |
| Llama 3.1 8b | Meta | 128,000 |
| Llama 3.1 70b | Meta | 128,000 |
| Llama 3.1 405b | Meta | 128,000 |
| GPT-3.5 Turbo | OpenAI | 16,400 |
| GPT-4 | OpenAI | 8,000 |
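A practical use of the context window figures is checking which models can hold a given workload. The sketch copies a subset of the numbers from the table above and assumes the window must cover the prompt plus the requested output; providers may also cap output length separately, and prompt token counts must come from each model's own tokenizer. `models_that_fit` is a hypothetical helper.

```python
from typing import Dict, List

# Context windows in tokens, copied from the table above (subset).
CONTEXT_WINDOWS: Dict[str, int] = {
    "Gemini 1.5 Pro": 2_000_000,
    "Gemini 1.5 Flash": 1_000_000,
    "Claude 3.5 Sonnet": 200_000,
    "GPT-4o": 128_000,
    "Llama 3.1 70b": 128_000,
    "GPT-3.5 Turbo": 16_400,
    "GPT-4": 8_000,
}


def models_that_fit(prompt_tokens: int, max_output_tokens: int) -> List[str]:
    """Models whose window holds both the prompt and the requested output."""
    needed = prompt_tokens + max_output_tokens
    return [name for name, window in CONTEXT_WINDOWS.items() if window >= needed]


print(models_that_fit(prompt_tokens=150_000, max_output_tokens=4_000))
# ['Gemini 1.5 Pro', 'Gemini 1.5 Flash', 'Claude 3.5 Sonnet']
```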

Limitations of LLM Benchmarks

While invaluable, benchmarks have limitations. They often focus on specific tasks and may not fully capture real-world performance. Additionally, they can be susceptible to overfitting, where models excel on benchmark data but struggle with unseen examples. Human evaluation remains essential to complement quantitative metrics and assess qualitative aspects like coherence and fluency.

The Future of LLM Benchmarking

As LLMs continue to advance, so must benchmarking methodologies. There is a growing need for benchmarks that evaluate more complex reasoning, creativity, and common sense. Moreover, developing benchmarks for specific domains, such as healthcare or finance, will be crucial for ensuring the safe and reliable deployment of LLMs in these critical areas.

By understanding the principles of LLM benchmarking and its limitations, we can better assess the capabilities of these powerful models and drive responsible AI development.

These statistics are taken from the Vellum.ai LLM Leaderboard report.