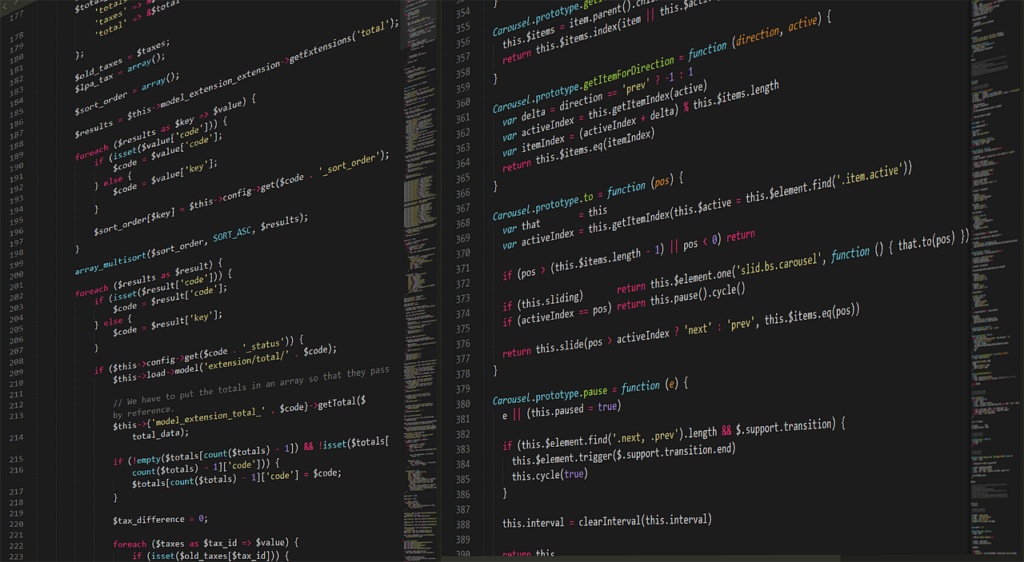

In computing, coding, or computer programming, refers to the construction of instruction sequences, called programs, that direct the behavior and performance of computers or applications. These programs contain a series of well-defined actions to be executed by the central processing unit (CPU). Programmers, or coders, have a wide range of high-level programming languages at their disposal.

However, mastery of a programming language is just the tip of the iceberg. A truly skilled coder possesses a multifaceted knowledge base. This includes domain-specific expertise, linguistic dexterity, algorithmic knowledge, and logical acumen. Software development encompasses activities like analyzing requirements, testing and debugging, implementing build systems, and managing derived artifacts. While programming, i.e., writing and editing code, is the core activity, software development covers the entire lifecycle.

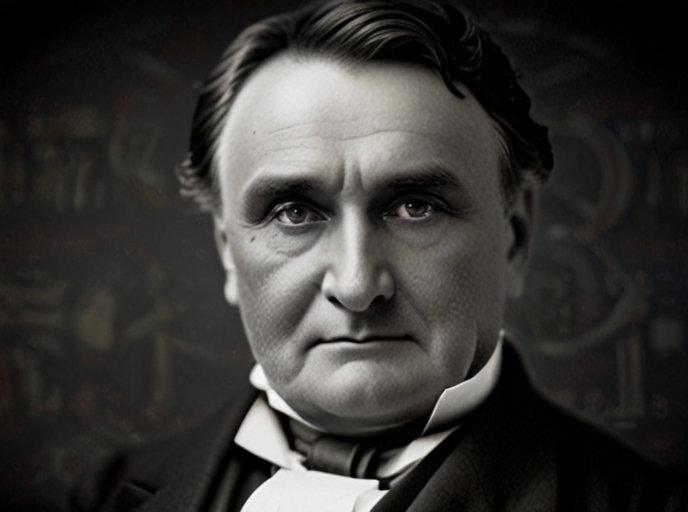

Ada Lovelace, the daughter of the famous poet Lord Byron, was an English mathematician and writer. In her extensive, lettered notes on Charles Babbage’s proposed general-purpose computer, the Analytical Engine, she described an algorithm for computing Bernoulli numbers. It is often considered the world’s first published computer algorithm, and Ada Lovelace has often been cited as the world’s first computer programmer.
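To give a feel for the kind of calculation involved, here is a minimal Python sketch that computes Bernoulli numbers. It is not a reconstruction of Lovelace's procedure for the Analytical Engine; it uses a standard textbook recurrence, and the function name `bernoulli_numbers` is made up for this illustration. It follows the convention in which the second Bernoulli number is -1/2.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n_max):
    """Return [B_0, ..., B_n_max] as exact fractions.

    Uses the recurrence sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1,
    with B_0 = 1. This is a modern textbook method, chosen for brevity.
    """
    b = [Fraction(1)]
    for m in range(1, n_max + 1):
        # Sum the terms that involve the already-known B_0 .. B_{m-1}.
        acc = sum(comb(m + 1, k) * b[k] for k in range(m))
        # Solve the recurrence for B_m (its coefficient is C(m+1, m) = m+1).
        b.append(-acc / (m + 1))
    return b


if __name__ == "__main__":
    for index, value in enumerate(bernoulli_numbers(8)):
        print(f"B_{index} = {value}")
```

Exact fractions are used instead of floating-point numbers so that rounding errors do not accumulate from one step of the recurrence to the next.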

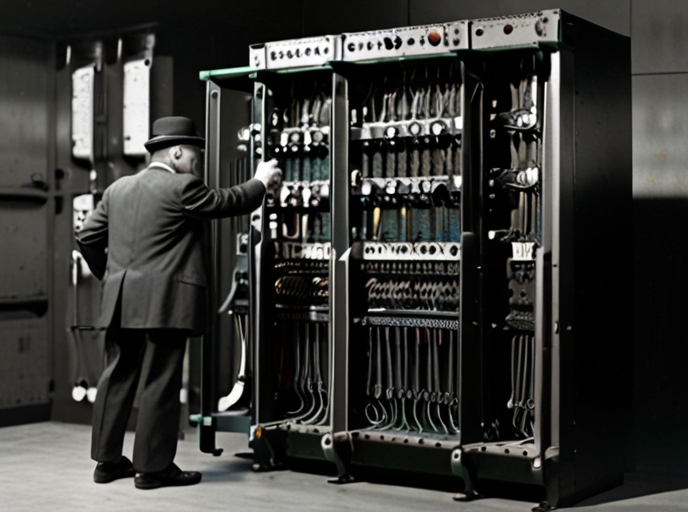

The Jacquard loom utilized a chain of cards punched and laced together in a sequence. Each set of cards programmed different patterns in the fabric leading to the automation of the weaving and textile industry. The punched card of the Jacquard loom worked on a binary system, where a punched hole represented 1 and absence of a hole represented 0. This laid the groundwork for binary code used in computer programming. In 1944, IBM used paper tapes that operated on similar lines to the punched cards of a Jacquard loom.
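The hole-or-no-hole idea can be sketched in a few lines of Python. This is only an illustration of the binary principle: the `O` and `.` notation and the function names `row_to_bits` and `bits_to_int` are invented here and do not describe how a real loom read its cards.

```python
def row_to_bits(row):
    """Turn one card row into bits: 'O' (a hole) becomes 1, anything else 0."""
    return [1 if ch == "O" else 0 for ch in row]


def bits_to_int(bits):
    """Read a list of bits as a binary number, most significant bit first."""
    value = 0
    for bit in bits:
        value = value * 2 + bit
    return value


# A made-up "card" with three rows of four positions each.
card = ["O..O", ".OO.", "OOOO"]

if __name__ == "__main__":
    for row in card:
        bits = row_to_bits(row)
        print(row, bits, bits_to_int(bits))
```

Each row is just a pattern of present and absent holes, yet the same pattern can be read as a number, which is the step that links punched cards to binary code.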

In the early 1940s, Konrad Zuse designed Plankalkül, which is considered the first high-level programming language designed for a computer. It was mainly created for engineers to carry out routine and repetitive tasks efficiently and quickly. The earliest electronic computers of the 1940s, such as ENIAC, did not store their programs in memory the way later machines like UNIVAC did. Programming them was more like configuring an electrical panel: instructions were “wired in” through a complex network of switches and cables.

Programs written for one machine wouldn’t work on another due to fundamental differences in hardware architecture and instruction representation. Rewriting code for each different computer significantly slowed down the development process. The invention of assembly language and, later, standardized instruction set architectures (ISAs) helped bridge the gap. Assembly language provided a more human-readable representation of machine code, and shared instruction sets gave programs a degree of portability across compatible machines. This shift marked a turning point in the history of computing, making programming more accessible and laying the foundation for the diverse software ecosystems we see today.

The 1950s saw a revolution in programming with the rise of high-level languages like FORTRAN, COBOL, and LISP. These languages allowed programmers to write instructions that resemble natural language or mathematical formulas. The late 1960s and early 1970s saw the rise of two influential languages: Pascal and C. Pascal, designed by Niklaus Wirth, emphasized structured programming with control flow constructs like loops and conditionals. C, developed by Dennis Ritchie, offered a balance between high-level abstraction and low-level control. Both languages, with their distinct strengths, laid the groundwork for the development of modern programming paradigms.

The 1970s saw the personal computer boom alongside the spread of user-friendly languages like BASIC. These languages lowered the barrier for beginners to interact with computers. The 1980s and 1990s brought Object-Oriented Programming (OOP) into the mainstream with languages like C++ and, in the mid-1990s, Java. These languages popularized concepts like classes and objects, which became fundamental for building complex software applications.
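For readers who have not met classes and objects before, here is a minimal sketch in Python (chosen for readability, not because it is one of the languages named above). The `BankAccount` class and its methods are hypothetical and exist only for this illustration.

```python
class BankAccount:
    """A class is a blueprint: it bundles data with the code that acts on it."""

    def __init__(self, owner, balance=0):
        self.owner = owner
        self.balance = balance

    def deposit(self, amount):
        """Add money to this account and return the new balance."""
        if amount <= 0:
            raise ValueError("deposit amount must be positive")
        self.balance += amount
        return self.balance


if __name__ == "__main__":
    # Objects are individual instances built from the blueprint.
    ada = BankAccount("Ada")
    grace = BankAccount("Grace", balance=100)
    ada.deposit(50)
    print(ada.owner, ada.balance)      # each object keeps its own state
    print(grace.owner, grace.balance)
```

The point of the design is that each object carries its own data, while the behavior is written once in the class and shared by every instance.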

The late 20th and early 21st centuries witnessed the web’s rise, spawning languages like HTML (structure), CSS (styling), and JavaScript (interactivity) to build dynamic web experiences. Python’s versatility flourished in this era, finding uses in web development, data science, and general-purpose programming due to its readability and extensive libraries.

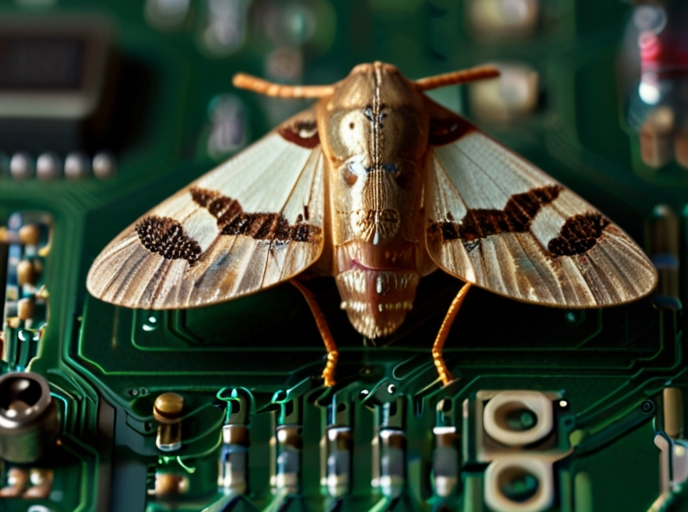

The Urban Legend of the Bug!

The story of the moth in the computer is an interesting mix of truth and legend.

The Culprit Moth: In 1947, a team at Harvard University was working on the Harvard Mark II, not the ENIAC as the story is sometimes told. They encountered a mysterious malfunction and traced it to a moth trapped in one of the machine’s relays. Grace Hopper, a computer scientist who later became a rear admiral in the U.S. Navy, was part of that team. The operators removed the moth and documented the incident in the logbook, even taping the moth to the page with the note “First actual case of bug being found.” This logbook is now a historical artifact. The term “bug” for a malfunction already existed, but Hopper’s retelling of the story likely helped popularize it within the computer science community. The fact that a real, physical bug caused a computer problem made the term quite fitting!

The Invisible Code that Powers Our Digital Universe!

Imagine a vast, intricate network: websites, apps, and interconnected systems that make up the digital universe we navigate daily. This world is built and maintained in the language computers understand: code. Code forms the invisible backbone of the internet, making data transfer, communication networks, and cloud storage possible.

Programmers, like digital architects, use code to create the software, applications, and the operating systems that power it all. This enables instant communication between people and systems, effectively connecting our planet as a single, digital globe.

Code also connects devices, networks, and services, forming the very foundation of our interconnected digital world. Protecting this digital realm is crucial. Coding plays a vital role in developing robust security measures to safeguard the ecosystem from cyber threats. It also contributes to effective data management, enabling the storage, retrieval, and utilization of the vast amount of information flowing through it.

Coding is the global language for a global world. The influence of coding and programming transcends geographical boundaries. Online learning platforms and educational software, built through code, provide accessible education opportunities on a global scale. Programming even supports scientific research by enabling simulations, data modeling, and the processing of complex computations. The constant evolution and expansion of our digital ecosystem is driven by advancements in coding and programming. As these practices continue to innovate, so too will the digital universe we interact with every day.

Welcome GenAI: A Powerful Ally for Coders

Get ready for a revolution in coding! Generative AI (GenAI) has arrived, offering programmers a game-changing boost. It can automate repetitive tasks, generate code snippets, complete functions, and, for simple cases, even draft entire applications from natural language prompts.

But GenAI goes beyond just writing code. AI-powered tools can analyze your code for potential errors, optimize its performance, and ensure it follows best practices. This translates to higher quality, more reliable software. Development gets a significant speed boost too. GenAI tackles the mundane tasks, freeing programmers to focus on complex logic and innovation.

Learning to code can be daunting, but GenAI lends a helping hand. It can suggest code snippets for beginners, explain their functionality, and provide guidance, making programming more accessible than ever. GenAI fosters better teamwork by offering real-time code suggestions and ensuring consistency across projects. It can even generate comprehensive documentation, making complex systems easier to understand and maintain.

Get ready for a personalized coding experience! GenAI tailors the development environment to your coding style and preferences. Need help cracking a tough coding problem? GenAI can analyze vast amounts of data and past code examples to suggest innovative solutions. Don’t wait for problems to arise. GenAI can predict potential issues and maintenance needs in your code, allowing for proactive fixes. Plus, it integrates seamlessly with DevOps pipelines, automating testing, deployment, and monitoring for a smooth development process.

In short, GenAI is transforming the coding landscape. By automating tasks, improving code quality, and empowering programmers, it’s paving the way for a future of faster, more efficient, and innovative software development.

Humans vs. AI: Self-Coding and Program Creation

As described above, GenAI can automate repetitive tasks, write code snippets, and even create basic programs from instructions. However, current AI struggles with true creativity and independent problem-solving, which limits its ability to build complex programs from scratch. GenAI models learn from vast amounts of existing code, so their output tends to stay close to patterns they have already seen.

Enter tools like GitHub Copilot. It analyzes your code and context, suggesting relevant solutions and speeding up development. Copilot can even understand your intent and generate entire functions or refactor existing code. It can also answer your questions about specific code or APIs you’re using. OpenDevin takes things a step further. This AI platform not only suggests code but can also write entire sections, fix bugs, and even help ship features. GPT Engineer leverages the capabilities of large language models to understand natural language descriptions. Describe the functionality you want, and it generates the code to get you started. Simple websites and programs can be created with minimal human interaction through the use of these tools.

However, it’s important to remember that AI coding requires human oversight and input. AI-generated code needs careful review to avoid biases or security vulnerabilities inherited from the training data. While AI automates tasks, human skills like critical thinking, creativity, and problem-solving remain irreplaceable. In the future, programmers will likely leverage AI tools for efficiency while providing guidance and creative direction. As AI and programming languages continue to develop, the potential for self-coding AI in the distant future remains an open question, prompting ongoing research and discussion.

Coding’s Future

A Sci-Fi Script or Collaborative Symphony?

In the not-so-distant future, coding might resemble a scene ripped from a sci-fi flick: AI writes its own code, leaving humans to grapple with the chilling possibility of becoming obsolete. But what does this mean for the future of coding, jobs, and society as a whole? Will AI ever code like a rockstar developer, churning out flawless masterpieces on its own? Maybe in the distant future, when AI achieves sentience and starts writing existential video games.

The idea of self-coding AI conjures images of Skynet from Terminator, a system that not only codes itself but also evolves beyond human control. While such a scenario is still far-fetched, advancements in AI continue to push the boundaries. Researchers are exploring ways for AI to learn and adapt more independently, potentially leading to self-coding systems in the future.

Jobocalypse or Jobotopia?

Here’s the real shocker: GenAI might not steal your job entirely, but it will definitely change it. Programmers who can adapt and work alongside AI will be the real superheroes of the future. Think Neo dodging bullets in the Matrix, but with lines of code instead. So what will the future of coding be?

The future of coding with GenAI could go either way. It could be a dystopian nightmare where AI dominates and subjugates humans, or it could be a utopian symphony of human and machine collaboration, creating software that solves the world’s problems and makes it a better place.

The future of coding with GenAI is wide open. It’s up to us to write the narrative. So grab your keyboard, your favorite beverage (caffeinated or not, we’re not judging!), and get ready to code the next chapter of human innovation. Dystopia or Utopia, only time will tell.